AI: From Fun to Violations


- Written by Will from Holland


Artificial intelligence (AI) and other technological innovations are advancing faster than we can keep up with.

Since the arrival of the AI bot ChatGPT, almost every tech company has been using artificial intelligence.

As a result, we are slow to recognize the risks associated with AI, and we struggle to address them through regulation and enforcement.

At its core, though, AI is nothing more than a set of algorithms that give you answers to the questions you put in. In short: as soon as an algorithm can draw conclusions or generate something new, we call it AI.

And that can be a black box: you put certain data into the box, but you have no insight into what happens to that data inside it. When a conclusion or new data comes out, you have no idea how it came about.

Risks of privacy violations, discrimination, and disinformation

You can unleash algorithms and artificial intelligence on all the data you can find on the internet. For example, the location data of millions of people is available online. You could use AI to analyze that data and determine who might be involved in criminal activity. If your data is part of that database, that is a significant invasion of your privacy.

The risks of AI also depend on the data it is trained on. If an AI model is trained on non-representative data, for example, discrimination and disinformation are right around the corner.

Take healthcare, for example. Traditionally, much medical knowledge has been based on research in men. If you train an AI model exclusively on this data, its output may not be reliable for women or children.

And AI is often trained on non-representative data

Generally, people must give permission before their privacy-sensitive health data can be used to train an AI model. In practice, some groups of people give consent while others don't.

As a result, some groups of people may be overrepresented in the training data and others underrepresented. The data the AI is trained on is then not representative, but the AI won't tell you that.

We tend to assume that computer decisions are neutral. However, because the training data is often unrepresentative, the information and conclusions it generates can be incorrect and even discriminatory.

But it's not all bad… AI offers many great opportunities!

For instance, there are examples of AI recognizing cancer better than the human eye. But artificial intelligence will certainly not replace the doctor. AI can help reach a good diagnosis more quickly and take over certain other tasks, which frees up the doctor's time for more personal contact with patients or for helping more patients. This can make healthcare more efficient and perhaps cheaper, while increasing confidence in care. That makes sense, right?

And what about you?

Just have a look at what AI can do for you in your personal or professional life. Below is an infographic with a variety of questions you can ask AI to make many everyday tasks easier. Quite handy, huh?

And what can you do to stay safe with AI around?

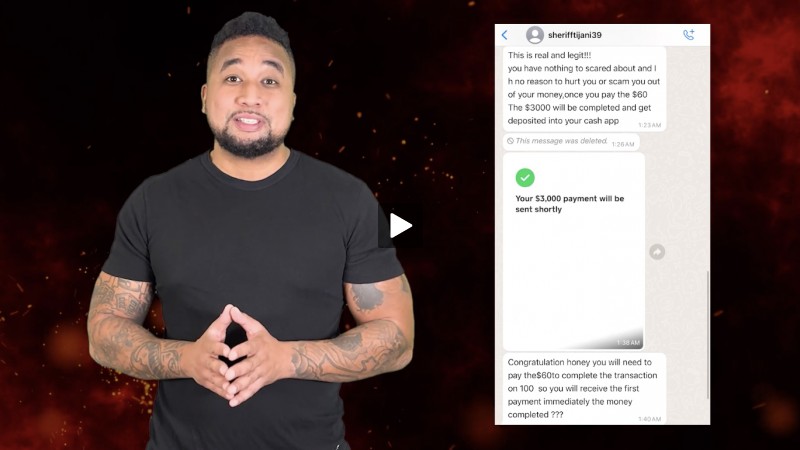

Be a healthy skeptic. Stop and think critically about any news you read, any ads you see, and any messages or emails you receive. Always ask yourself: "What is the source? Is this information plausible? And how can I fact-check it?"

AI can make your life easier, as long as you keep your guard up…